Migrating Internal Python Packages From GitLab to Google Cloud Artifact Registry

This post describes how we migrated our internal Python packages from the GitLab package registry to Google Cloud Artifact Registry.

At Docsumo, we recently moved our version control system from GitLab to GitHub. One challenge that emerged during this migration was that GitHub Packages has no native registry for Python packages. Since our infrastructure already runs on Google Cloud, we moved our packages to Google Cloud Artifact Registry instead.

Here are the steps I followed to migrate around 250 versions of our internal packages from GitLab's Python package registry to Artifact Registry.

Create a Python repository

First, create a Python repository in Artifact Registry. You can do this in the Google Cloud Console by selecting Python as the format and adjusting the other options as needed.

Alternatively, you can create the repository with the gcloud CLI:


gcloud artifacts repositories create python-packages \
    --repository-format=python \
    --location=us \
    --description="Python package repository"

You can change the repository name from python-packages to anything you prefer and adjust the other settings as needed.

After creating the repository, your repository URL will look like this:

https://<LOCATION>-python.pkg.dev/<PROJECT_ID>/<REPOSITORY_NAME>/
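Because the repository URL follows the fixed pattern above, it can also be assembled from its parts. A minimal sketch: `artifact_registry_urls` is a hypothetical helper (not part of any Google library) and `my-project` is a placeholder project ID. Besides the upload URL, it derives the `simple/` index URL that pip uses when installing packages from the repository.

```python
def artifact_registry_urls(location: str, project_id: str, repository: str) -> dict:
    """Build the upload URL and the pip index URL for an Artifact Registry
    Python repository (hypothetical helper based on the URL pattern)."""
    base = f"https://{location}-python.pkg.dev/{project_id}/{repository}/"
    return {
        # Passed to `twine upload --repository-url ...`
        "upload_url": base,
        # PEP 503 "simple" index passed to `pip install --index-url ...`
        "index_url": base + "simple/",
    }


if __name__ == "__main__":
    urls = artifact_registry_urls("us", "my-project", "python-packages")
    print(urls["upload_url"])
    print(urls["index_url"])
```

Once the migration is done, consumers can install from the new repository with pip install --index-url followed by the index URL and the package name.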

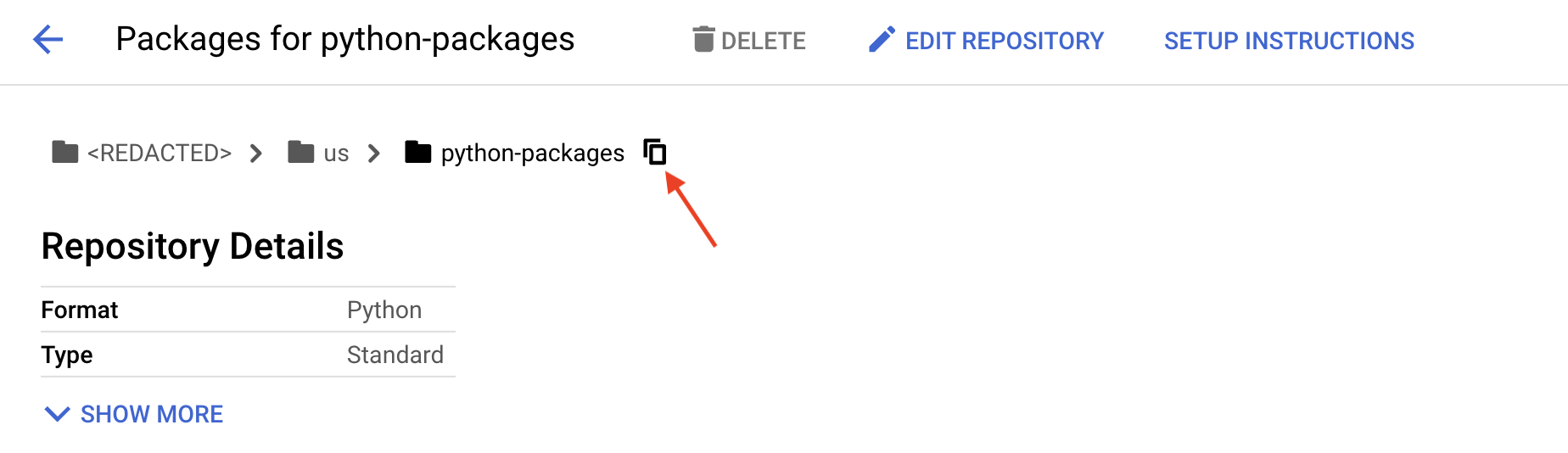

You can also copy the repository URL from the Google Cloud Console interface.

Next, store the repository URL in an environment variable; you will need it later when uploading the packages.


export REPO_URL=<REPOSITORY_URL>

Download the packages

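The next step is to download the packages from GitLab. Below is the Python script I used.

Before running it, set the GITLAB_USERNAME, GITLAB_TOKEN and GITLAB_ORG_NAME environment variables (GITLAB_ORG_NAME is the group's path or numeric ID). You can generate a GitLab personal access token in your GitLab user settings; make sure to give the token the read_registry scope. Because the script also calls the GitLab REST API, the token may additionally need the read_api scope.

After the script finishes, all of your packages will be in the directory set by DOWNLOAD_DIR (packages by default).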


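import os
import subprocess
import sys
import urllib.parse

import requests

# Credentials and the GitLab group (organization) to export packages from
GITLAB_USERNAME = os.environ.get("GITLAB_USERNAME")
GITLAB_TOKEN = os.environ.get("GITLAB_TOKEN")
GITLAB_ORG_NAME = os.environ.get("GITLAB_ORG_NAME")
DOWNLOAD_DIR = "packages"


def get_all_group_packages(group: str, token: str):
    """Use the GitLab API to list all PyPI packages in a group and yield their metadata."""
    # Group paths must be URL-encoded, e.g. "parent/child" -> "parent%2Fchild"
    url = f"https://gitlab.com/api/v4/groups/{urllib.parse.quote(group, safe='')}/packages"
    params = {
        "per_page": 100,  # GitLab caps per_page at 100
        "page": 1,  # GitLab pages are 1-indexed
        "exclude_subgroups": "false",
        "order_by": "project_path",
        "package_type": "pypi",  # skip npm, Maven, etc. packages in the same group
    }
    headers = {"PRIVATE-TOKEN": token}

    while True:
        response = requests.get(url, headers=headers, params=params, timeout=30)
        # Fail loudly: an error body is a non-empty dict and would never end the loop
        response.raise_for_status()
        packages = response.json()
        if not packages:
            break
        yield from packages
        params["page"] += 1


os.makedirs(DOWNLOAD_DIR, exist_ok=True)

for package in get_all_group_packages(GITLAB_ORG_NAME, GITLAB_TOKEN):
    package_name = f"{package['name']}=={package['version']}"
    print(f"Downloading {package_name}")

    # Each package belongs to a project, whose PyPI index lives at
    # /api/v4/projects/<project_id>/packages/pypi/simple
    index_url = (
        f"https://{GITLAB_USERNAME}:{GITLAB_TOKEN}@gitlab.com/api/v4/projects/"
        f"{package['project_id']}/packages/pypi/simple"
    )
    subprocess.run(
        [sys.executable, "-m", "pip", "download", package_name,
         "--no-deps", "--no-build-isolation",
         "--dest", DOWNLOAD_DIR, "--index-url", index_url],
        check=False,  # keep going if a single version fails to download
    )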
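The script above stops when GitLab returns an empty page. GitLab also reports pagination in response headers, including X-Next-Page, which is empty on the last page, so an alternative is to follow that header instead. A minimal standard-library sketch; `iter_gitlab_pages` and `make_group_packages_fetcher` are hypothetical names, and the fake fetcher in the usage lines stands in for real API calls.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Callable, Dict, Iterator, List, Tuple

# A page fetcher returns (items on this page, value of the X-Next-Page header).
PageFetcher = Callable[[int], Tuple[List[Dict[str, Any]], str]]


def iter_gitlab_pages(fetch_page: PageFetcher) -> Iterator[Dict[str, Any]]:
    """Yield items from every page, following X-Next-Page until it is empty."""
    page = 1  # GitLab pages are 1-indexed
    while page:
        items, next_page = fetch_page(page)
        yield from items
        page = int(next_page) if next_page else 0


def make_group_packages_fetcher(group: str, token: str) -> PageFetcher:
    """Build a fetcher for GET /groups/:id/packages, limited to PyPI packages."""
    base = f"https://gitlab.com/api/v4/groups/{urllib.parse.quote(group, safe='')}/packages"

    def fetch_page(page: int) -> Tuple[List[Dict[str, Any]], str]:
        query = urllib.parse.urlencode({"package_type": "pypi", "per_page": 100, "page": page})
        request = urllib.request.Request(f"{base}?{query}", headers={"PRIVATE-TOKEN": token})
        # urlopen raises HTTPError on 4xx/5xx responses, so errors cannot loop forever
        with urllib.request.urlopen(request, timeout=30) as response:
            return json.load(response), response.headers.get("X-Next-Page", "")

    return fetch_page


if __name__ == "__main__":
    # Offline usage: a fake fetcher serving two pages of results
    pages = {1: ([{"name": "a"}], "2"), 2: ([{"name": "b"}], "")}
    print([p["name"] for p in iter_gitlab_pages(lambda n: pages[n])])
```

In real use, iter_gitlab_pages(make_group_packages_fetcher(GITLAB_ORG_NAME, GITLAB_TOKEN)) would replace the page-counting loop; keeping the fetcher separate makes the pagination logic testable without network access.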

Upload the packages

With all the package files downloaded, the next step is to upload them to the newly created Artifact Registry repository.

Start by installing the required tools:


pip install twine keyrings.google-artifactregistry-auth

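Then, make sure you are authenticated with Google Cloud (for example, with gcloud auth login or Application Default Credentials); the keyring backend installed above uses those credentials, so twine should not prompt for a password. Navigate to the directory where the packages were downloaded and use twine to upload them. If some versions were only available as source distributions, pip will have downloaded .tar.gz files instead of wheels, so upload those as well.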


twine upload --repository-url "$REPO_URL" *.whl --verbose

After running the above command, the packages should start uploading to Google Cloud Artifact Registry.
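The *.whl glob in that command only picks up wheels. A small script can collect both wheels and source distributions and pass them to twine in one call. A minimal sketch, assuming twine and keyrings.google-artifactregistry-auth are installed in the same environment and REPO_URL is exported as described earlier; `build_upload_command` is a hypothetical helper, and the command is only printed unless you pass --run.

```python
import glob
import os
import subprocess
import sys
from typing import List


def build_upload_command(repo_url: str, download_dir: str) -> List[str]:
    """Return a twine command that uploads every wheel and sdist in download_dir."""
    files = sorted(
        glob.glob(os.path.join(download_dir, "*.whl"))
        + glob.glob(os.path.join(download_dir, "*.tar.gz"))
    )
    if not files:
        raise FileNotFoundError(f"no distribution files found in {download_dir!r}")
    # `python -m twine` runs the twine installed for this interpreter
    return [sys.executable, "-m", "twine", "upload",
            "--repository-url", repo_url, "--verbose", *files]


if __name__ == "__main__":
    repo_url = os.environ.get("REPO_URL", "")
    if repo_url and os.path.isdir("packages"):
        command = build_upload_command(repo_url, "packages")
        if "--run" in sys.argv[1:]:
            subprocess.run(command, check=True)  # stop if twine reports a failure
        else:
            print(" ".join(command))  # dry run: show what would be executed
    else:
        print("Set REPO_URL and run this next to the packages/ directory.")
```

Printing the command first makes it easy to check the file list before anything is sent to the registry.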

I hope this guide helps you make a similar transition smoothly. Feel free to reach out if you have any questions or need any assistance!